- 1. What does the industry actually mean by 'responsible AI'?

- 2. What's driving the focus on responsible AI today?

- 3. The 6 core principles of responsible AI

- 4. Why do so many responsible AI efforts fail in practice?

- 5. How to implement responsible AI in the real world

- 6. What frameworks and standards guide responsible AI?

- 7. What's different about responsible AI for GenAI?

- 8. Responsible AI FAQs

- What does the industry actually mean by 'responsible AI'?

- What's driving the focus on responsible AI today?

- The 6 core principles of responsible AI

- Why do so many responsible AI efforts fail in practice?

- How to implement responsible AI in the real world

- What frameworks and standards guide responsible AI?

- What's different about responsible AI for GenAI?

- Responsible AI FAQs

What Is Responsible AI? Principles, Pitfalls, & How-tos

- What does the industry actually mean by 'responsible AI'?

- What's driving the focus on responsible AI today?

- The 6 core principles of responsible AI

- Why do so many responsible AI efforts fail in practice?

- How to implement responsible AI in the real world

- What frameworks and standards guide responsible AI?

- What's different about responsible AI for GenAI?

- Responsible AI FAQs

Responsible AI is the discipline of designing, developing, and deploying AI systems in ways that are lawful, safe, and aligned with human values.

It involves setting clear goals, managing risks, and documenting how systems are used. That includes processes for oversight, accountability, and continuous improvement.

What does the industry actually mean by 'responsible AI'?

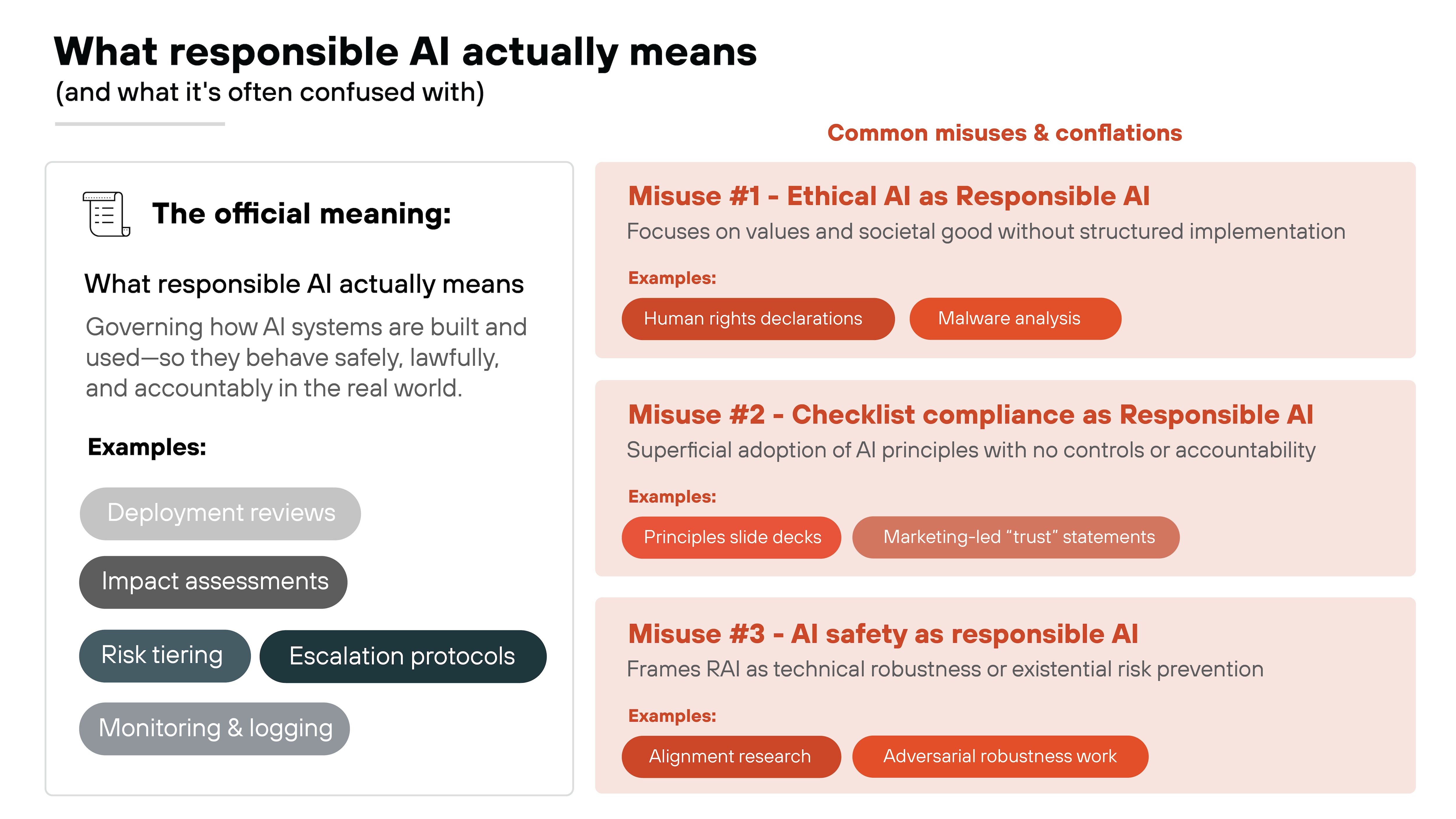

The phrase responsible AI gets used a lot. But in practice, the term still gets applied inconsistently.

Some use it to describe high-level ethical values. Others treat it like a checklist for compliance. And some use it interchangeably with concepts like trustworthy AI or AI safety.

That's a problem.

Because without a clear definition, it's hard to build a real program around it.

At its core, responsible AI refers to how AI systems are governed so they behave safely, lawfully, and accountably in the real world. It's about managing risk, ensuring oversight, and making sure the system does what it's supposed to do without causing harm.

But the term often gets conflated with three different concepts:

-

Responsible AI as governance discipline: Building structures, controls, and reviews to govern how AI is designed, deployed, and monitored.

-

Ethical AI as intent or philosophy: Centering human values, rights, and societal norms, often without concrete implementation steps.

-

AI safety as technical robustness: Preventing accidents, adversarial failures, or long-term existential risks, especially in advanced systems.

All three are valid areas of concern. But they're not the same.

This article focuses on responsible AI as a practical governance discipline: what organizations can do to ensure their AI systems are trustworthy, traceable, and under control throughout their lifecycle.

What's driving the focus on responsible AI today?

AI is no longer a behind-the-scenes tool. It makes decisions, generates content, and interacts directly with people. And when it fails, the consequences aren't hypothetical.

Why?

Because those failures are already happening.

Hallucinated medical advice. Toxic or misleading content. Job candidates filtered out unfairly. All from AI systems that weren't built—or governed—with enough safeguards.

At the same time, generative AI is scaling fast.

It's being embedded into search engines, browsers, customer service platforms, and creative workflows. Which means: the stakes are higher. And the room for error is smaller.

Not to mention, regulators are watching.

So are customers, employees, and internal compliance teams. They want to know how AI decisions are made, who's accountable, and what happens when something goes wrong.

This is where responsible AI comes in.

And it's important to be clear about what that actually means.

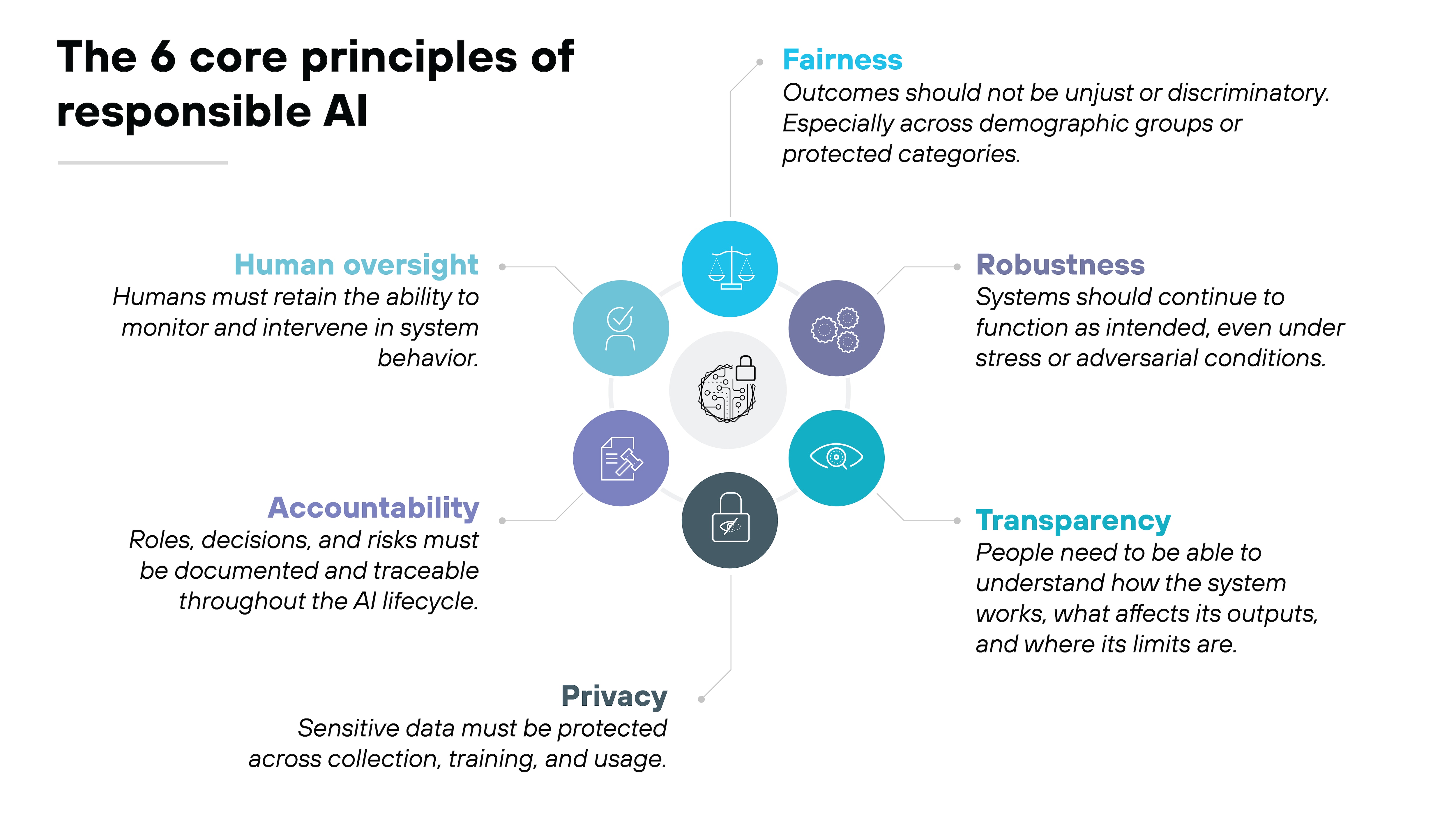

The 6 core principles of responsible AI

Responsible AI begins with shared principles.

They set the foundation for how AI systems should be developed, deployed, and governed.

But these principles aren't just abstract values.

They define what trustworthy behavior looks like in real systems and they guide the decisions teams make at every stage of the AI lifecycle.

Let's break down each principle and why it matters.

1. Fairness

Fairness means systems should not create discriminatory, exclusionary, or unjust outcomes. Especially across demographic groups or protected categories. This includes how training data is sourced, how models are evaluated, and how edge cases are handled.

Without fairness controls, AI bias can quietly propagate through the system.

2. Robustness

Robustness means the system behaves reliably. Even when it's under stress, exposed to unusual inputs, or targeted by attackers.

Examples include degraded data quality, system failures, and edge conditions. Without robustness, a model that performs well in testing can break down in deployment.

3. Transparency

Transparency makes the system understandable. That includes explaining how inputs affect outputs, surfacing known limitations, and enabling meaningful review.

Without transparency, stakeholders can't evaluate the system's behavior or trust its results.

4. Privacy

Privacy protects sensitive data from exposure, misuse, or overretention.

That spans from data collection to training pipelines to user logs. Without privacy safeguards, systems can inadvertently leak personal information or violate policy and regulatory expectations.

5. Accountability

Accountability means someone owns the outcome.

Roles, decisions, and risks have to be clearly documented and traceable across the AI lifecycle. Without it, organizations lose control over how AI systems behave and who's responsible when they fail.

6. Human oversight

Human oversight ensures people remain in control.

It includes setting override protocols, defining intervention triggers, and reviewing system performance in context. Without oversight, automation can drift beyond its intended role without anyone noticing.

Now let's map those principles to lifecycle touchpoints where they need to show up in practice.

As you can see in the table below, these principles don't exist in isolation. For example, increasing fairness may require collecting demographic data, raising new privacy risks.

| Responsible AI principles across the system lifecycle |

|---|

| Principle | Lifecycle touchpoints | Example action |

|---|---|---|

| Fairness | Data selection, evaluation | Run bias audits across subgroups. Document known limitations. |

| Robustness | Testing, deployment, monitoring | Conduct adversarial stress tests. Validate inputs. Monitor for instability. |

| Transparency | Design, deployment | Publish model documentation. Explain how outputs are generated. |

| Privacy | Data ingestion, storage, logs | Minimize use of sensitive data. Apply masking or redaction. Log access. |

| Accountability | All lifecycle stages | Assign owners. Document decisions. Establish clear escalation paths. |

| Human oversight | Deployment, monitoring | Define override protocols. Track how and when humans intervene. |

Which means responsible AI isn't about maximizing any single value.

It's about navigating tradeoffs with structure, documentation, and judgment. When principles are grounded in lifecycle actions, they become easier to apply and easier to enforce.

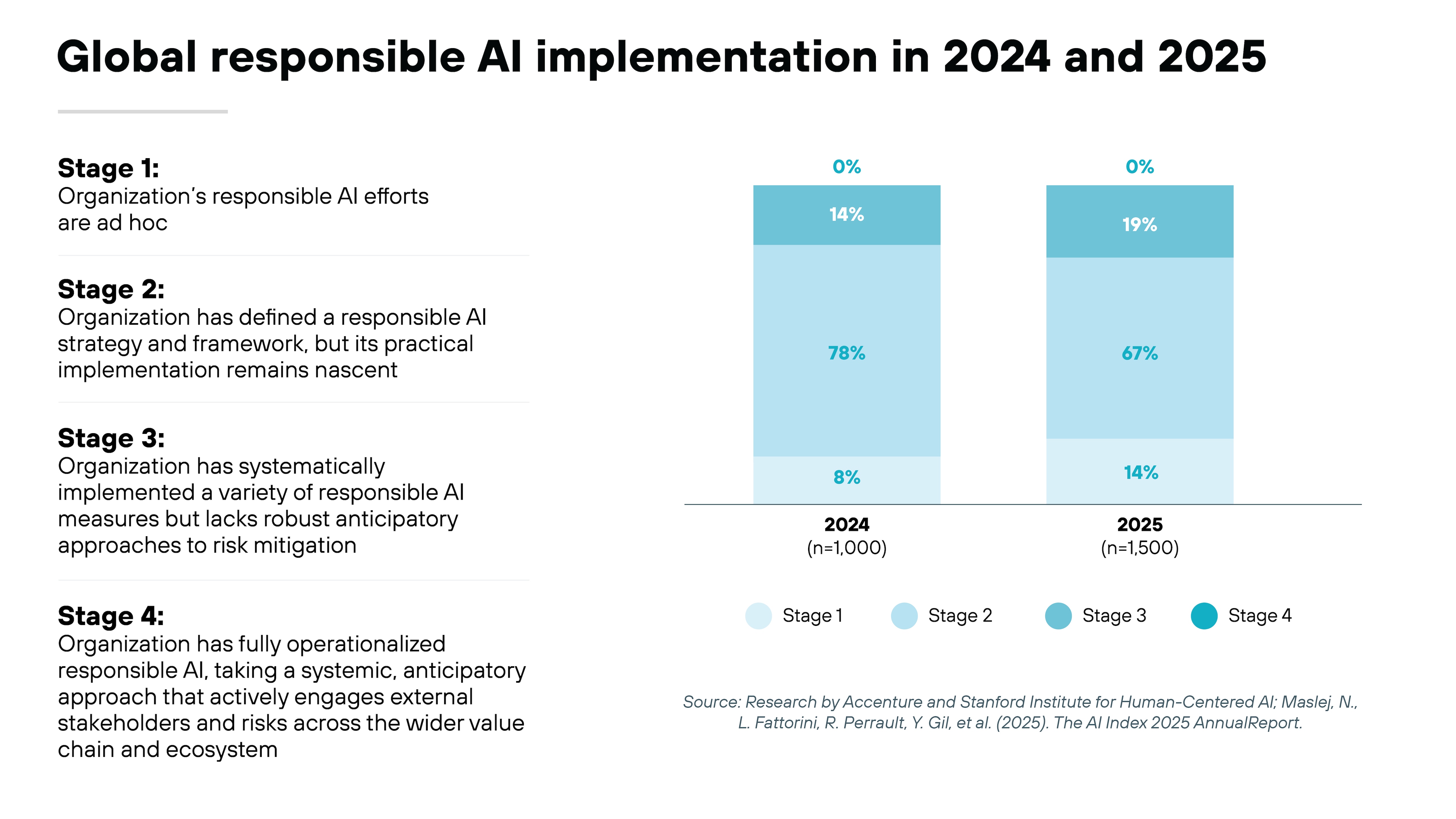

Why do so many responsible AI efforts fail in practice?

Many organizations have launched responsible AI initiatives. Fewer have sustained them. Even fewer have made them work in real systems.

In fact, recent research shows that fewer than 1% of companies were assessed at the highest maturity stage for responsible AI. Most are still stuck at the earliest maturity stages with little real governance in place.

Why?

Because most failures don't come from lack of interest. They come from poor structure.

When principles aren't paired with process, oversight fades and nothing sticks. This is especially common in organizations that treat responsible AI as a side effort rather than a formal discipline with defined roles and repeatable controls.

You can see the patterns across sectors, industries, and regions.

A system gets deployed. A decision is made. Something goes wrong. And there's no clear way to explain what happened, who approved it, or how to prevent it next time.

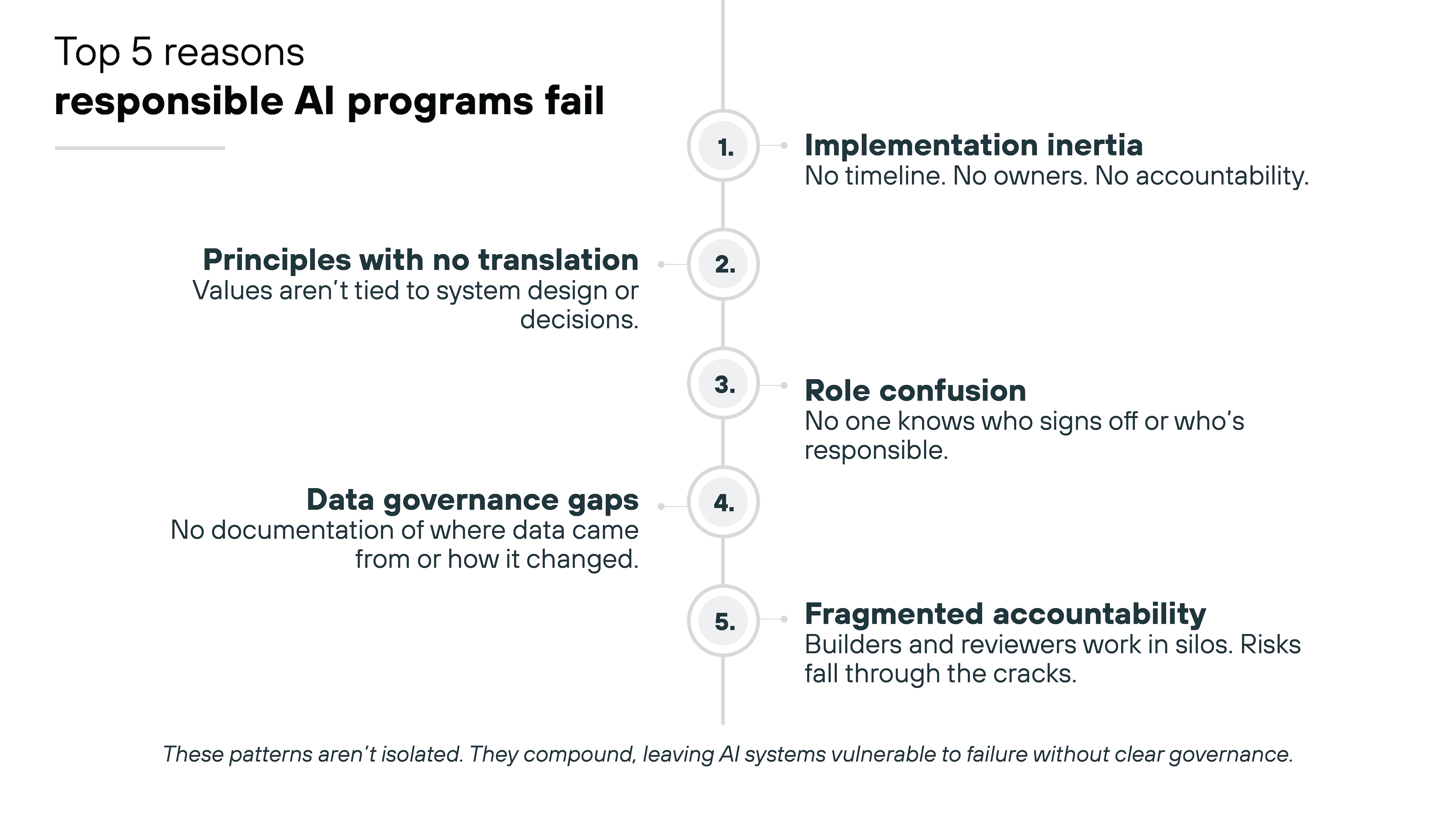

Here's where responsible AI most often breaks down:

Implementation inertia

Responsible AI programs often stall after the principles phase.

Leadership supports the idea. Teams express interest. But there's no timeline. No path to execution. And no consequences when tasks are missed.

Without incentives, enforcement, or escalation paths, the initiative fades into background noise.

Principles with no operational translation

Many programs publish values like fairness, transparency, or accountability. But they don't define what those values mean for system design, data curation, or model monitoring.

Teams are left to interpret the guidance on their own. That leads to inconsistency and gaps in coverage.

Role confusion

Who's responsible for bias testing? Who owns model documentation? Who approves risk reviews before launch? In many cases, no one knows.

Responsibilities are spread across policy, legal, and engineering. But the handoffs are unclear. And when something fails, the accountability trail is hard to follow.

Data governance gaps

The system depends on data. But the data isn't documented. There's no record of where it came from, how it was modified, or who had access.

That makes it harder to explain how a model works or why it produced a given result. It also makes it harder to respond when harm occurs.

Fragmented accountability

Responsible AI reviews are often disconnected from day-to-day development.

The people reviewing risks don't work on the system. The people building the system don't engage with the governance process.

As a result, ownership becomes distributed but diluted. And critical gaps go unnoticed.

These aren't isolated issues. They tend to compound. One weak link leads to another. And the result is a responsible AI program that exists in principle but never in practice.

The next section breaks down how to move from principles to implementation at both the system and program level.

Free AI Risk Assessment

Get a complimentary vulnerability assessment of your AI ecosystem.

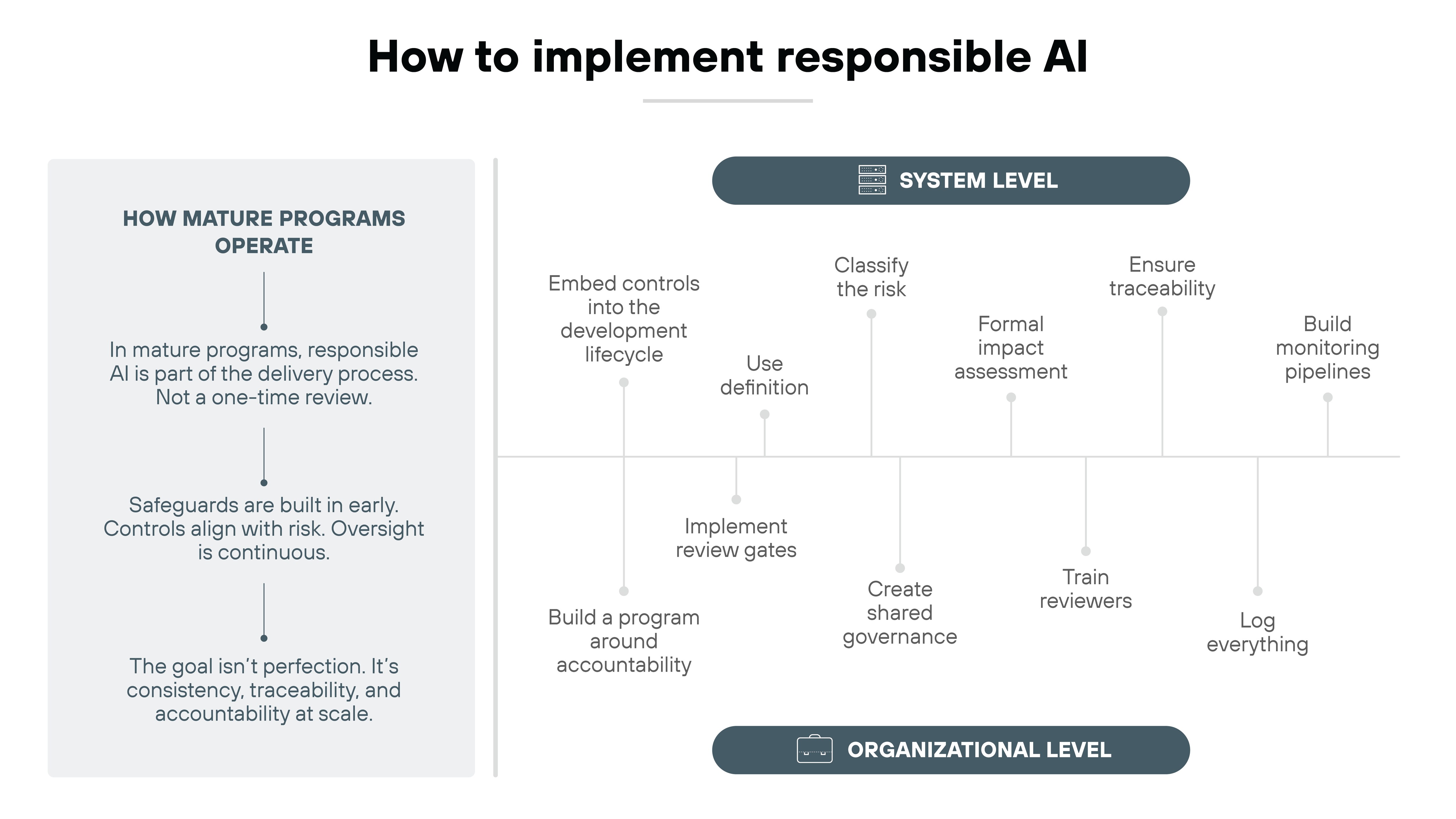

Claim assessmentHow to implement responsible AI in the real world

Principles aren't enough.

Even the best-intentioned responsible AI programs fall short without clear implementation steps.

Success depends on what you build and how you govern it across the full AI lifecycle.

There are two main dimensions to focus on:

- What your teams do at the project level

- And how your organization supports it at the program level

Let's start with the system itself.

System level: Embed controls into the development lifecycle

Start with use definition.

Be explicit about what the model is for and what it isn't. Don't forget prohibited uses, even if they seem indirect or unlikely. Because deployment context shapes risk.

A model optimized for efficiency could end up excluding high-need users without proper constraints. So define the intended purpose, document guardrails, and outline misuse scenarios from the outset.

Tip:Map misuse scenarios to specific user behaviors, not just technical boundaries.Then classify the risk.

Not every model needs the same level of scrutiny. Some assist humans. Others make high-impact decisions. The risk tier should determine how deep your safeguards go.

Use a formal impact assessment.

Evaluate stakeholder harms, use context, and system behavior. This won't replace technical testing. But it will guide it.

Ask: Who might this system affect? How? Under what conditions?

Tip:Use impact assessments to flag where safeguards may conflict like fairness vs. privacy.Ensure traceability.

Track data lineage, configuration history, and decision logic.

Because when something goes wrong, you'll need to retrace the path. You can't do that without documentation.

Build monitoring pipelines.

Don't just track performance metrics. Add drift detection, outlier alerts, and escalation triggers. Something needs to alert you when the system starts to behave in ways it shouldn't.

And when that happens, have a defined escalation path: name the person responsible. Spell out the triggers.

Without that, monitoring becomes passive observation.

Tip:Define escalation thresholds before launch. Don't wait to invent them under pressure.

Organizational level: Build a program around accountability

Start with review gates.

Don't greenlight model launches without a second set of eyes.

Require approval from a responsible AI lead or cross-functional review group based on the system's risk tier. Because risk isn't always obvious to the team building the model.

Review adds distance. And distance reveals assumptions.

Tip:Map misuse scenarios to specific user behaviors, not just technical boundaries.Create shared governance.

Don't let responsible AI sit with a single team. Assign clear roles across AI engineering, legal, product, and compliance.

And document the handoffs. Vague ownership is where oversight breaks down.

Review adds distance. And distance reveals assumptions.

Tip:Assign roles along with clear decision and escalation authority.Train your reviewers.

If someone is expected to flag issues, make sure they understand the system. And how it works. Otherwise, the review process becomes a formality.

Tip:Give reviewers direct access to full model documentation, including configs and decision logic.Log everything.

Not just for audits. But to preserve memory over time. What was reviewed. What was flagged. What was approved. And why.

That's how you create continuity. And it's how future decisions get better.

Evolve from reactive fixes to embedded safeguards

Launching a responsible AI program is just the start. To make it sustainable, the practices need to evolve.

Instead of reacting to issues after deployment, mature programs build safeguards into system design. Controls are tied to risk tiers. Escalation paths and governance become part of the delivery process. Not side workflows.

That's the shift from intention to integration. Where responsible AI isn't just approved. It's applied.

Frameworks and standards can help structure these practices.

The next section outlines the most widely used models—and how they support governance, risk, and implementation across the AI lifecycle.

Interactive tour: Prisma AIRS

See firsthand how Prisma AIRS implements AI monitoring, red teaming, and governance controls.

Launch tourWhat frameworks and standards guide responsible AI?

Responsible AI is easier to talk about than to put into practice.

Which means organizations need more than principles. They need clear, structured guidance.

Today, several well‑established frameworks exist. Each supports a different aspect of responsible AI, from governance and risk to implementation and legal compliance.

Here's how they compare:

| Comparison of responsible AI frameworks and standards |

|---|

| Framework / Standard | Issuer | Primary focus | What it adds |

|---|---|---|---|

| ISO/IEC 42001 | ISO/IEC JTC 1/SC 42 | AI management systems | Defines how organizations structure AI governance, roles, policies, and documentation. |

| ISO/IEC 42005 | ISO/IEC JTC 1/SC 42 | AI system impact assessment | Guides teams through system-specific risk reviews, harm identification, and mitigation planning. |

| ISO/IEC 23894 | ISO/IEC | AI risk management | Aligns AI risk handling with ISO 31000 and supports structured analysis across the AI lifecycle. |

| NIST AI RMF 1.0 | U.S. NIST | Trust and risk management | Provides practical lifecycle actions across Govern, Map, Measure, and Manage; useful for implementation teams. |

| EU AI Act | European Commission | Binding regulation | Establishes legal obligations, high-risk system requirements, transparency rules, and conformity assessments. |

| OECD AI Principles | OECD | Global policy baseline | Sets shared expectations for fairness, transparency, robustness, and accountability; influences national policies. |

| UNESCO Recommendation on the Ethics of AI | UNESCO | Ethical and governance guidance | Provides globally endorsed standards for rights, oversight, and long-term societal considerations. |

| WEF Responsible AI Playbook | World Economic Forum | Enterprise practice guidance | Offers practical steps for building responsible AI programs and aligning them to business workflows. |

Important:

These frameworks aren't competing checklists. They cover similar themes but play different roles across governance, risk, implementation, and compliance.

Each one supports a different layer of responsible AI. Used individually or in combination, they help translate principles into systems that are actually governed.

- What Is AI Governance?

- AI Risk Management Frameworks: Everything You Need to Know

- NIST AI Risk Management Framework (AI RMF)

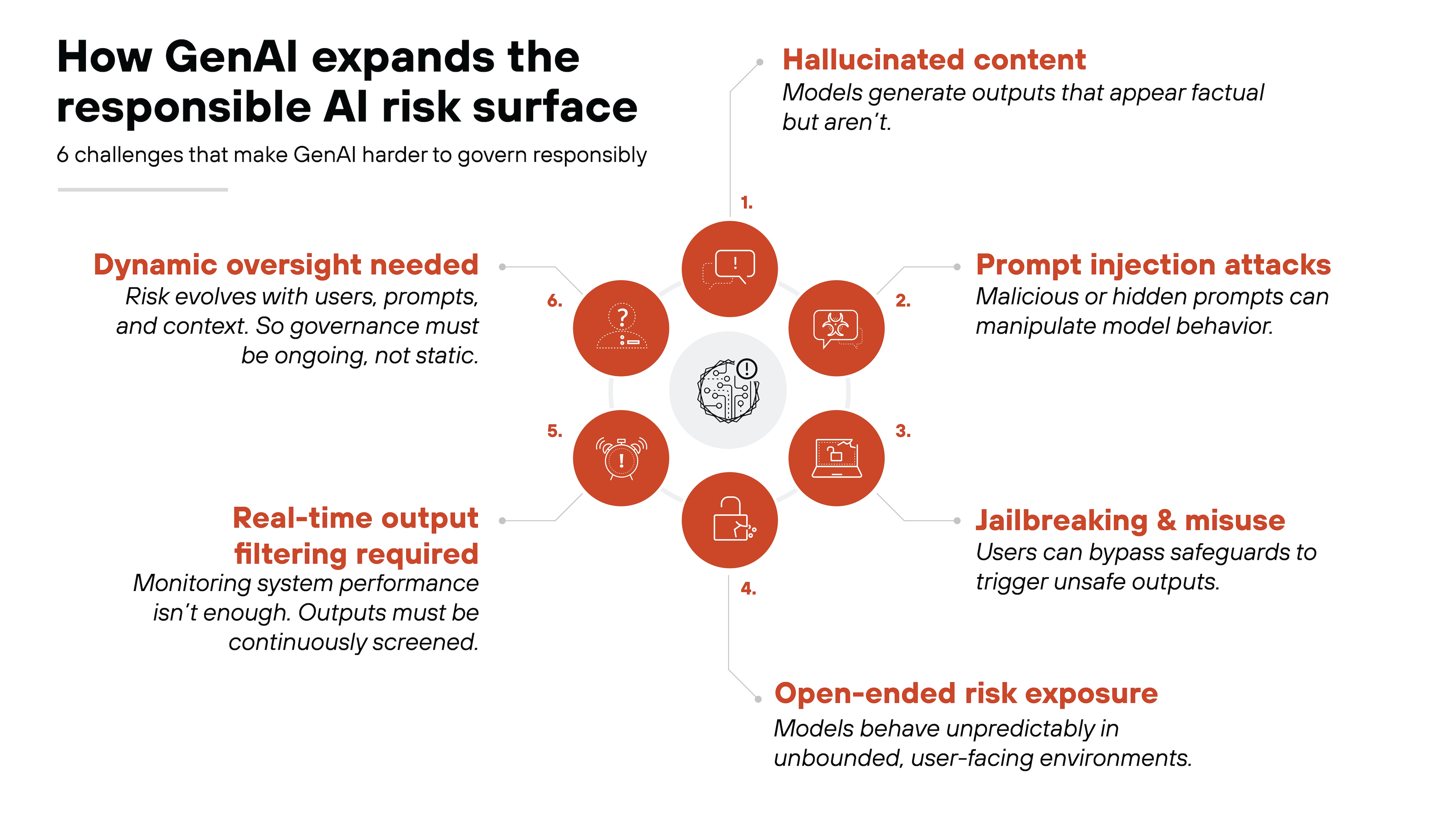

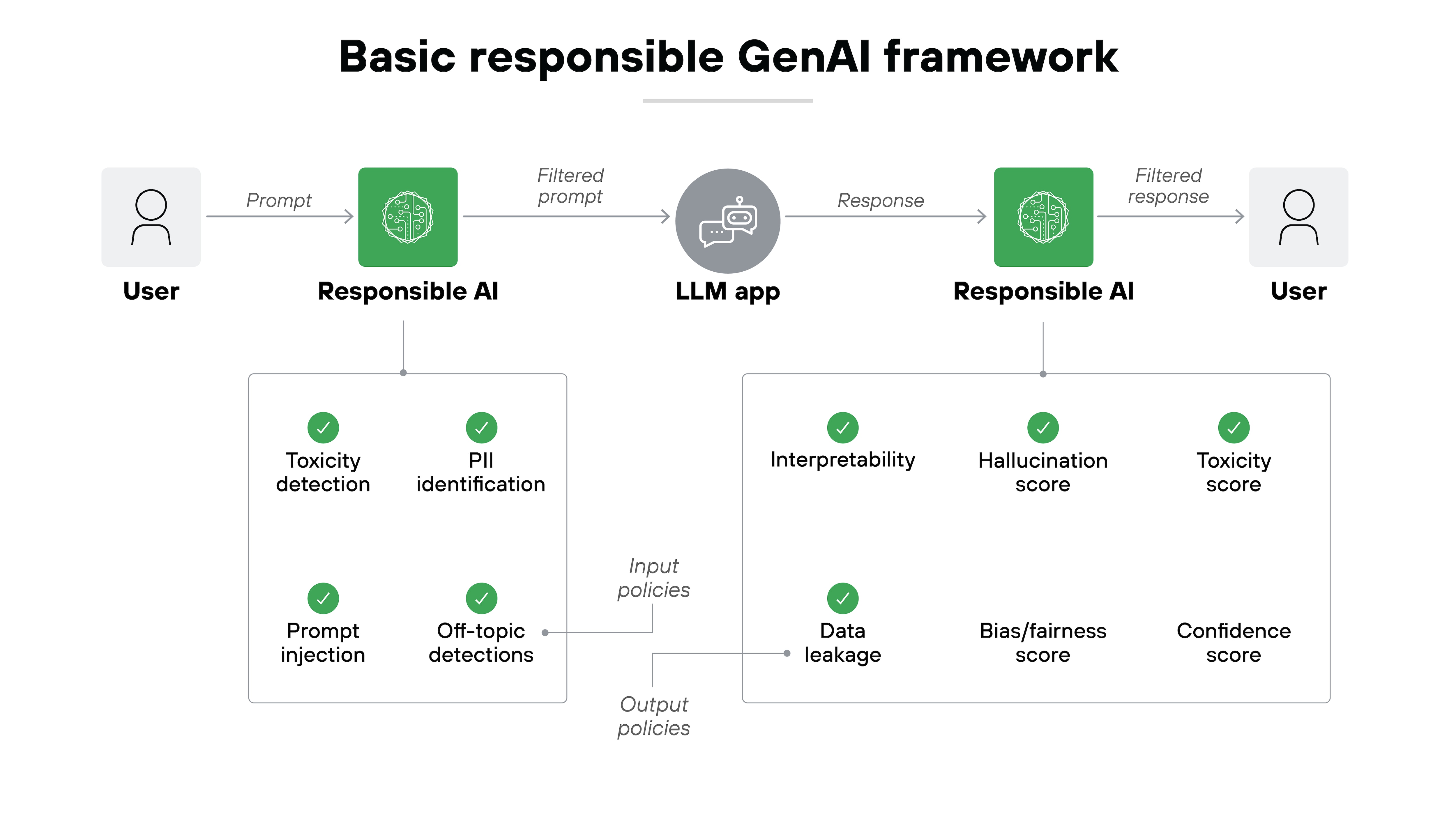

What's different about responsible AI for GenAI?

Generative AI has changed the risk surface.

It's no longer just about models running in the background. These systems now generate content, interact with users, and adapt to inputs in ways that are hard to predict.

So responsible AI needs to account for a new set of challenges. Because many of the GenAI security risks are behavioral—beyond purely statistical or architectural concerns.

Let's start with model behavior.

Large language models can hallucinate. They can be jailbroken. They can respond to prompts that were never anticipated.

Even when the training data is controlled, outputs can still be harmful, misleading, or biased. Especially in open-ended use cases.

And these risks don't decrease with scale. They grow.

Then there's output safety.

It's not enough to monitor system performance. You have to monitor what the model produces.

Content filtering, scoring systems, and UI-level interventions like user overrides or sandboxed generations all play a role.

And that monitoring can't be one-time. It has to be continuous because context shifts as new users, use cases, and adversarial prompts emerge.

On the governance side, monitoring and red teaming have to evolve.

That means behavioral evaluations.

It means testing for prompt injection, jailbreak pathways, and ethical alignment. And it means doing this before deployment. Ideally, before anything goes wrong in production.

These challenges don't replace traditional responsible AI practices. They build on them. What used to rely on one-time reviews now requires ongoing oversight and real-time behavioral monitoring.

In other words:

Risk tiering and impact assessments still matter.

But GenAI also demands systems that can catch harmful outputs and misuse early. Before they escalate at scale.

- Top GenAI Security Challenges: Risks, Issues, & Solutions

- How to Build a Generative AI Security Policy

- What Is AI Prompt Security? Secure Prompt Engineering Guide

- What Is AI Red Teaming? Why You Need It and How to Implement