- 1. What is an AI security policy?

- 2. Why do organizations need a GenAI security policy?

- 3. What should an AI security policy include?

- 4. How to implement an effective AI security policy

- 5. How to use AI standards and frameworks to shape your GenAI security policy

- 6. Who should own the AI security policy in the organization?

- 7. AI security policy FAQs

How to Build a Generative AI Security Policy

An effective generative AI security policy can be developed by aligning policy goals with real-world AI use, defining risk-based rules, and implementing enforceable safeguards.

It should be tailored to how GenAI tools are used across the business, not just modeled after general IT policy. The process includes setting access controls, defining acceptable use, managing data, and assigning clear responsibility.

What is an AI security policy?

An AI security policy is a set of rules and procedures that define how an organization governs the use of artificial intelligence—especially generative AI. It outlines what's allowed, what's restricted, and how to manage AI-related risks like data exposure, model misuse, and unauthorized access.

Basically, it's a formal way to set expectations for safe and responsible AI use across the business. That includes third-party AI tools, in-house models, and everything in between.

The goal of the policy is to protect sensitive data, enforce access controls, and prevent misuse—intentional or not. It also supports compliance with regulations that apply to AI, data privacy, or sector-specific governance.

For generative AI, the policy often covers issues like prompt input risks, plugin oversight, and visibility into shadow AI usage.

Important:

An AI security policy doesn't guarantee protection. But it gives your organization a baseline for risk management.

A good policy will make it easier to evaluate tools, educate employees, and hold teams accountable for responsible AI use. Without one, it's hard to know who's using what, where the data is going, or what security blind spots exist.

Why do organizations need a GenAI security policy?

Organizations need a GenAI security policy because the risks introduced by generative AI are unique, evolving, and already embedded in how people work.

Employees are using GenAI tools—often without approval—to draft documents, analyze data, or automate tasks. Some of those tools retain input data or use it for model training.

That means confidential business information could inadvertently end up in public models. Without a policy, organizations can't define what's safe or enforce how data is shared.

On top of that, attackers are using GenAI too. They can craft more convincing phishing attempts, inject prompts to override safeguards, or poison training data.

“Attackers use AI-driven methods to enable more convincing phishing campaigns, automate malware development and accelerate progression through the attack chain, making cyberattacks both harder to detect and faster to execute.

“While adversarial GenAI use is more evolutionary than revolutionary at this point, make no mistake: GenAI is already transforming offensive attack capabilities.”

A policy helps establish how to evaluate and mitigate these risks. And it provides structure for reviewing AI applications, applying role-based access, and detecting unapproved use.

In other words:

A GenAI security policy is the foundation that supports risk mitigation, safe adoption, and accountability. It gives organizations the ability to enable AI use without compromising data, trust, or compliance.

- Top GenAI Security Challenges: Risks, Issues, & Solutions

- What Is a Prompt Injection Attack? [Examples & Prevention]

- What Is AI Prompt Security? Secure Prompt Engineering Guide

- What Is Shadow AI? How It Happens and What to Do About It

Understand your generative AI adoption risk. Learn about the Unit 42 AI Security Assessment.

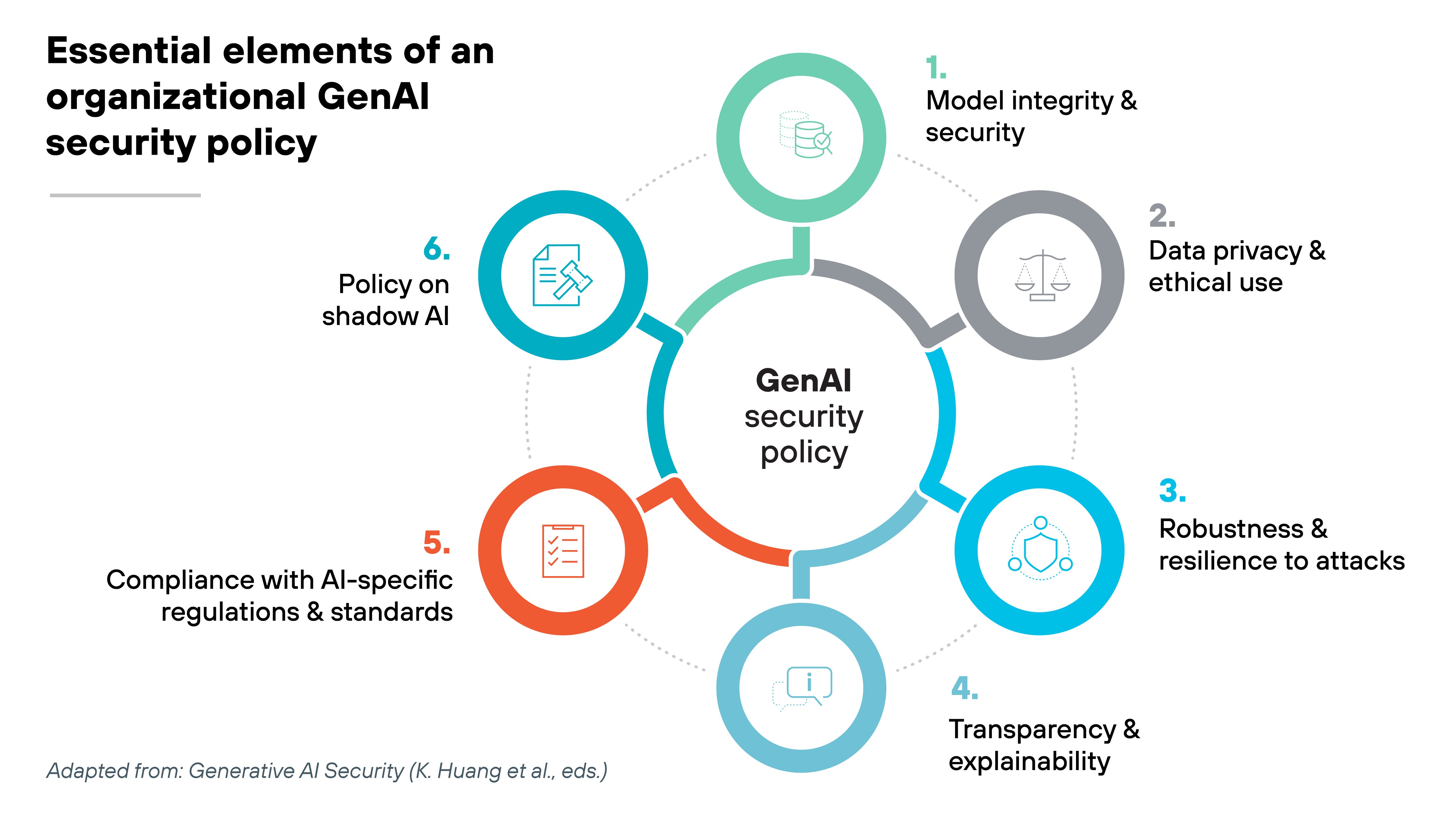

What should an AI security policy include?

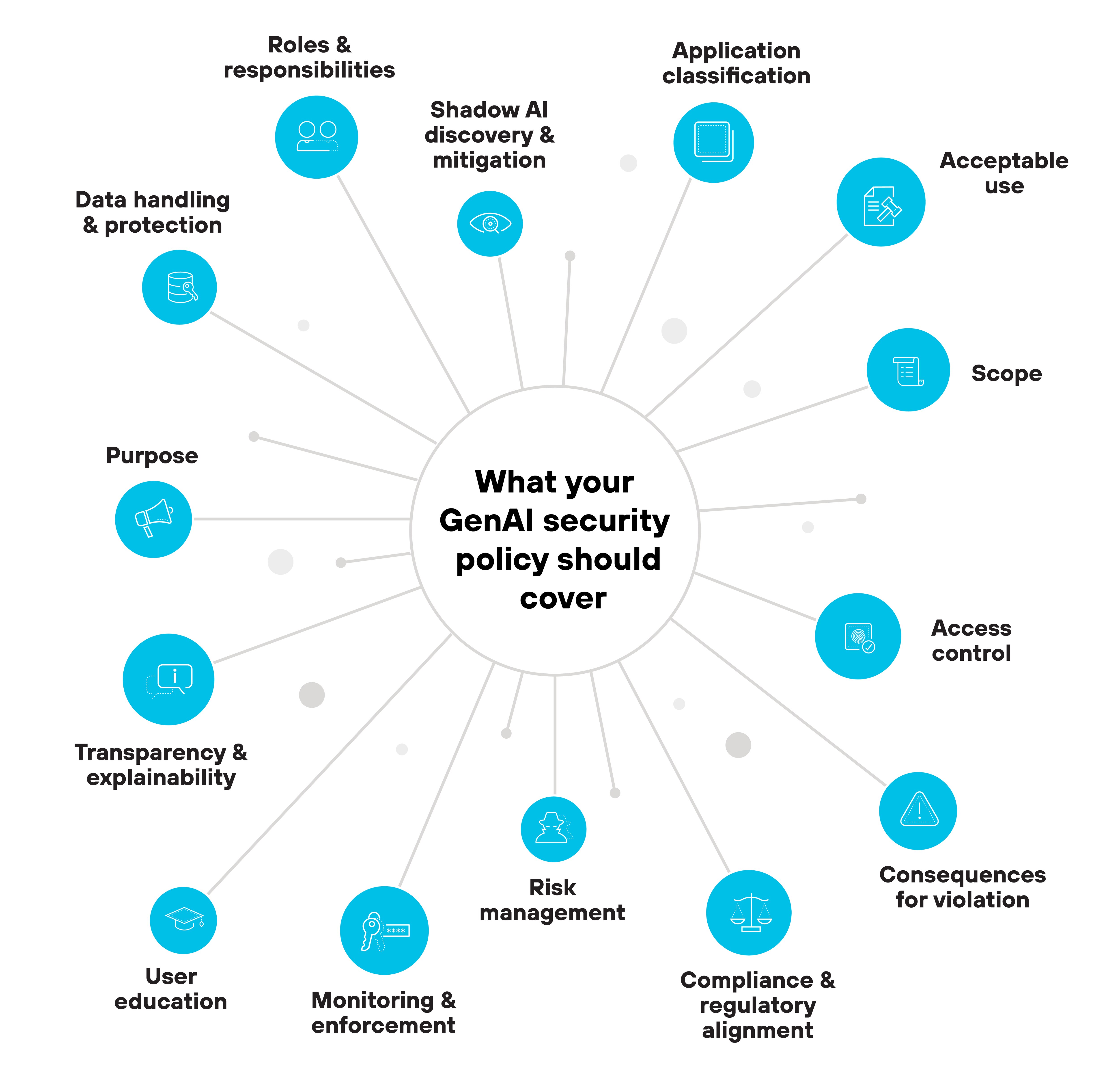

A GenAI security policy needs to be practical, enforceable, and tailored to the way generative AI is actually being used across the business.

That means going beyond generic guidelines and addressing specific risk points tied to tools, access, and behavior.

The following sections explain what your policy should cover and why each part matters:

- Purpose

The policy should start by stating its purpose. This defines why the document exists and what it's trying to achieve. In the case of GenAI, that usually includes enabling safe AI adoption, protecting sensitive data, and aligning usage with ethical and regulatory standards.

- Scope

Scope explains where the policy applies. It should identify which teams, tools, systems, and use cases are in scope. Without clear boundaries, it's hard to enforce or interpret what the policy actually governs.

- Roles and responsibilities

This section outlines who owns what. It's where you define responsibilities for security, compliance, model development, and oversight. Everyone from developers to business users should know their part in keeping GenAI use secure.

- Application classification

GenAI apps should be grouped into sanctioned, tolerated, or unsanctioned categories. Why? Because not all tools pose the same risk. Classification helps define how to apply access controls and where to draw the line on usage.

- Acceptable use

This is the part that tells users what they can and can't do. It should specify whether employees can input confidential data, whether outputs can be reused, and which apps are approved for different tasks.

- Access control

Granular access policies help restrict usage based on job function and business need. That might mean limiting which teams can use certain models, or applying role-based controls to GenAI plugins inside SaaS platforms.

- Data handling and protection

The policy should define how data is used, stored, and monitored when interacting with GenAI. This includes outbound prompt data, generated output, and any AI-generated content stored in third-party systems. It's critical for managing privacy and reducing leakage risk.

- Shadow AI discovery and mitigation

Not every GenAI tool in use will be officially approved. The policy should include steps to detect unsanctioned usage and explain how those tools will be reviewed, blocked, or brought under control.

- Transparency and explainability

Some regulations require model transparency. Even if yours don't, it's still good practice to document how outputs are generated. This section should explain expectations around model interpretability and what audit capabilities must be built in.

- Risk management

AI introduces different types of risks, from prompt injection to data poisoning. Your policy should state how risk assessments are conducted, how often they're reviewed, and what steps are taken to address high-risk areas.

- Compliance and regulatory alignment

AI-related regulations are still evolving. But some requirements already exist, especially around data privacy and ethics. The policy should reference applicable standards and describe how the organization plans to stay aligned as those requirements change.

- Monitoring and enforcement

Policy is only effective if it's enforced. This section should explain how usage will be logged, what gets reviewed, and how violations are handled. That might include alerting, blocking access, or escalating to HR or legal depending on the issue.

- User education

Users play a major role in AI risk. The policy should outline what kind of GenAI training will be required and how employees will stay informed about safe and appropriate use.

- Consequences for violation

Finally, your policy should clearly explain what happens if someone breaks the rules. This includes internal disciplinary actions and, where applicable, legal or compliance consequences. Clarity here reduces ambiguity and supports enforcement.
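
To make the application classification and acceptable use components more concrete, here is a minimal sketch of how the two could be expressed as a lookup. It is an illustration under stated assumptions, not a definitive implementation: the app names, the `AppClass` enum, the data labels, and the rule that tolerated apps may only receive public data are all hypothetical.

```python
from enum import Enum


class AppClass(Enum):
    SANCTIONED = "sanctioned"
    TOLERATED = "tolerated"
    UNSANCTIONED = "unsanctioned"


# Hypothetical inventory; a real one would come from your own app catalog.
APP_INVENTORY = {
    "internal-assistant": AppClass.SANCTIONED,
    "public-chatbot": AppClass.TOLERATED,
}

# Assumed rule set: which data labels each class may receive in prompts.
ALLOWED_DATA = {
    AppClass.SANCTIONED: {"public", "internal", "confidential"},
    AppClass.TOLERATED: {"public"},
    AppClass.UNSANCTIONED: set(),
}


def classify(app_name: str) -> AppClass:
    """Treat any app missing from the inventory as unsanctioned until reviewed."""
    return APP_INVENTORY.get(app_name, AppClass.UNSANCTIONED)


def is_use_allowed(app_name: str, data_label: str) -> bool:
    """Return True if the assumed policy lets this data label be sent to the app."""
    return data_label in ALLOWED_DATA[classify(app_name)]
```

Defaulting unknown apps to unsanctioned is one possible design choice. It keeps newly discovered tools blocked until someone reviews them, at the cost of more review requests.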

See firsthand how to make sure GenAI apps are used safely. Get a personalized AI Access Security demo.

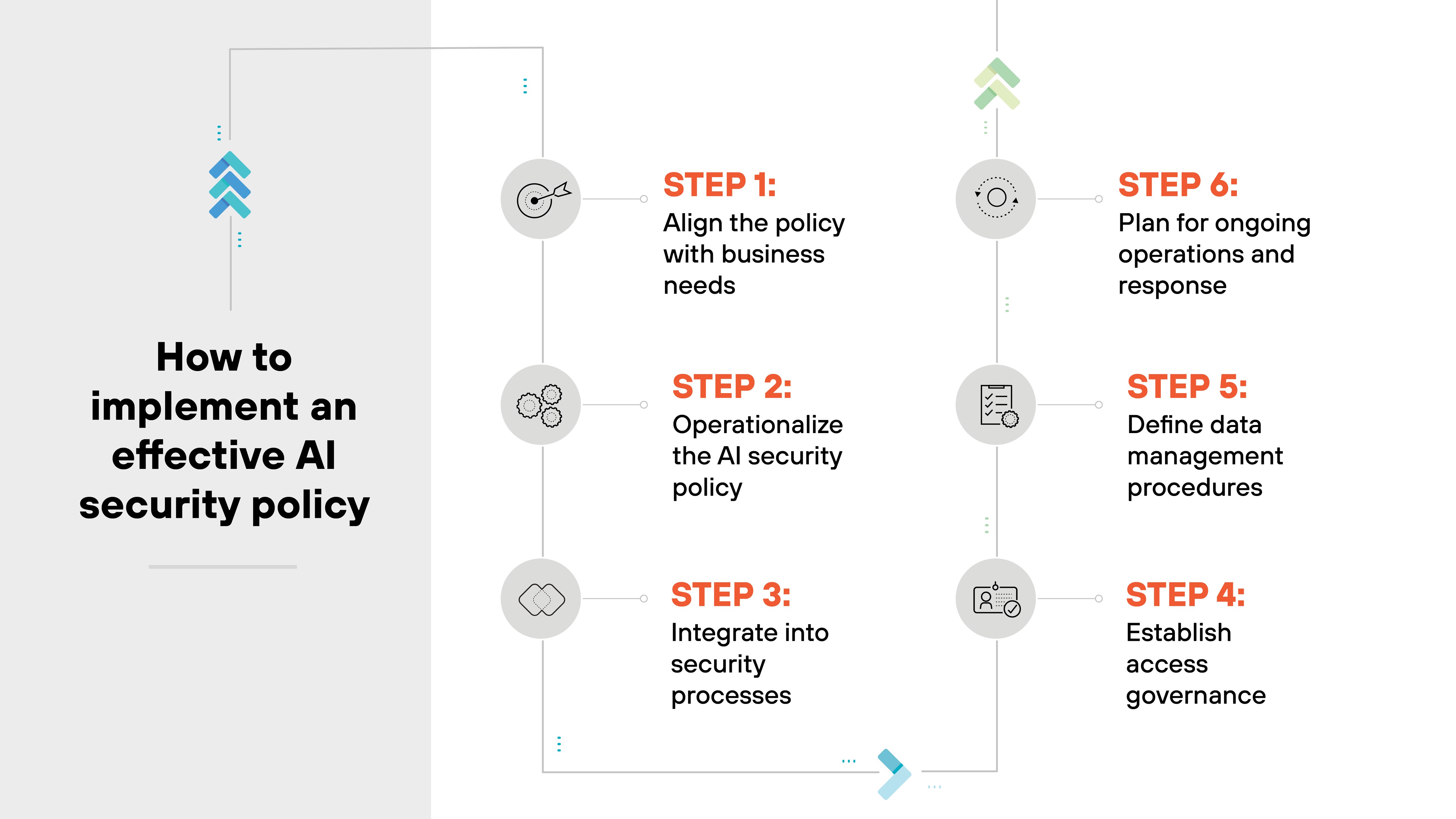

How to implement an effective AI security policy

Establishing an AI security policy is only half the battle. The harder part is putting it into practice.

That means turning the policy's goals and rules into real-world actions, systems, and safeguards that can adapt as AI evolves.

Let's walk through the core steps to actually implement an AI security policy in your organization.

Step 1: Align the policy with business needs

Start by understanding what your organization actually does with generative AI.

Are you building models?

Using off-the-shelf apps?

Letting employees try public tools?

Each of these comes with different risks and obligations.

The implementation process should directly reflect how GenAI is used in practice.

That means defining responsibilities, setting clear goals, and making sure the policy fits the organization's size, industry, and existing infrastructure.

Step 2: Operationalize the AI security policy

Once the policy is aligned, it has to be operationalized. That means translating policy statements into concrete processes, controls, and behaviors.

Start by mapping the policy to specific actions.

For example:

- If the policy says “prevent unauthorized GenAI use,” then implement app control or proxy rules to block unapproved tools.

- If the policy requires “model confidentiality,” then set up monitoring and data loss prevention for inference requests.

Also make sure you have procedures for onboarding, training, enforcement, and periodic review.
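
As a rough illustration of the first mapping above, the statement "prevent unauthorized GenAI use" could translate into an allow or block decision on outbound requests. This is only a sketch: the hostnames and the `proxy_decision` function are hypothetical, and a real deployment would express the same logic in the rule language of whatever proxy or app-control product is in use.

```python
from urllib.parse import urlsplit

# Hypothetical host lists; real ones would come from your app classification.
APPROVED_GENAI_HOSTS = {"genai.internal.example.com"}
KNOWN_GENAI_HOSTS = APPROVED_GENAI_HOSTS | {
    "chat.example-ai.com",
    "api.example-llm.net",
}


def proxy_decision(url: str) -> str:
    """Return 'allow' or 'block' for an outbound request URL.

    Expects a full URL with a scheme; a URL whose hostname cannot be
    parsed is treated as non-GenAI traffic in this sketch.
    """
    host = (urlsplit(url).hostname or "").lower()
    if host in KNOWN_GENAI_HOSTS and host not in APPROVED_GENAI_HOSTS:
        return "block"  # known GenAI tool that has not been approved
    return "allow"  # approved GenAI tool, or traffic that is not GenAI
```

Note that this sketch only blocks GenAI hosts it already knows about, so it depends on keeping the known-host list current.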

Step 3: Integrate into security processes

AI security doesn't exist in a vacuum. It should be woven into your broader security operations.

That means:

- Incorporating GenAI into threat modeling and risk assessments

- Applying secure development practices to AI pipelines

- Expanding monitoring and incident response to cover AI inputs and outputs

- Maintaining patch and configuration management for models, APIs, and underlying infrastructure

GenAI should be a dimension in your existing controls, not a parallel track.

Step 4: Establish access governance

Effective access governance starts with knowing who is using GenAI and what they're using it for.

Then, you can enforce limits. This includes:

- Verifying identities of all users and systems accessing models

- Controlling access to training data, inference APIs, and GenAI outputs

- Using role-based access control, strong authentication, and audit trails

Remember: GenAI can generate sensitive or proprietary content. If access isn't tightly controlled, misuse is easy—and hard to detect.
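
The combination of role-based access control and audit trails described in this step can be sketched in a few lines. The role names, permission strings, and in-memory `AUDIT_LOG` below are assumptions made for the example. A production system would rely on its identity provider for authentication and write audit records to tamper-resistant storage.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS = {
    "ml-engineer": {"training-data:read", "inference-api:invoke"},
    "analyst": {"inference-api:invoke"},
}

# In-memory audit trail for illustration only.
AUDIT_LOG: list[dict] = []


def check_access(user: str, role: str, permission: str) -> bool:
    """Decide access by role and record every decision, allowed or denied."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    AUDIT_LOG.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "permission": permission,
        "allowed": allowed,
    })
    return allowed
```

Logging denied requests as well as granted ones matters here, because repeated denials are often the first visible sign of attempted misuse.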

Step 5: Define data management procedures

AI models are only as secure as the data they rely on. That's why data handling needs its own set of safeguards.

This step includes:

- Classifying and labeling data used for training or inference

- Enforcing encryption, anonymization, and retention policies

- Monitoring how data is used, shared, and stored

- Setting up secure deletion processes for expired or high-risk data

Important: Many AI incidents stem from overlooked or poorly managed data. Solid data procedures are foundational to any AI security effort.
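
One way to picture the anonymization safeguard from this step is a redaction pass over prompts before they leave the organization. The sketch below is deliberately simplistic: the two regular expressions and the `redact_prompt` function are assumptions for illustration, and real sensitive-data detection needs much broader coverage than pattern matching on emails and U.S. Social Security numbers.

```python
import re

# Assumed patterns for illustration only.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact_prompt(prompt: str) -> tuple[str, list[str]]:
    """Replace matches with placeholders and report which labels were found."""
    found = []
    for label, pattern in PATTERNS.items():
        prompt, count = pattern.subn(f"[{label}]", prompt)
        if count:
            found.append(label)
    return prompt, found
```

Returning the list of labels alongside the redacted text lets the caller log what kind of data was caught without storing the sensitive values themselves.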

Step 6: Plan for ongoing operations and response

AI systems change fast. New models get deployed. Old ones get retrained. Threats evolve. So implementation can't be static.

This step covers:

- Monitoring model behavior, user activity, and system logs

- Running regular security assessments and red teaming exercises

- Preparing incident response playbooks specific to GenAI misuse or model compromise

- Maintaining rollback options for model changes or misbehavior
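
As a small example of monitoring user activity, the sketch below counts blocked GenAI requests per user and flags anyone at or above a threshold. The event fields (`user`, `action`) and the default threshold of 3 are hypothetical and do not reflect any particular product's log schema.

```python
from collections import Counter


def find_alerts(events: list[dict], threshold: int = 3) -> dict[str, int]:
    """Return users whose blocked GenAI requests meet or exceed the threshold.

    Each event is assumed to be a dict with 'user' and 'action' keys,
    where 'action' is either 'allow' or 'block'.
    """
    blocked = Counter(e["user"] for e in events if e["action"] == "block")
    return {user: n for user, n in blocked.items() if n >= threshold}
```

A count like this is only a starting signal. It would typically feed a review process or an incident response playbook, not trigger enforcement on its own.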

How to use AI standards and frameworks to shape your GenAI security policy

You don't have to start from scratch.

A growing set of AI security standards and frameworks can guide your policy decisions, especially in areas where best practices are still emerging.

These resources help you do three things:

- Identify and classify risks specific to AI and GenAI.

- Align your policies with regulatory and ethical expectations.

- Operationalize security controls across the GenAI lifecycle.

Let's break down the most relevant frameworks and how they can help.

| Standard or framework | What it is | How to use it in policy development |
|---|---|---|
| MITRE ATLAS Matrix | A framework for understanding attack tactics targeting AI systems | Use it to build threat models, define mitigation strategies, and educate teams about real-world attack scenarios |
| AVID (AI Vulnerability Database) | An open-source index of AI-specific vulnerabilities | Reference it to identify risk patterns and reinforce policy coverage for model, data, and system-level threats |
| NIST AI Risk Management Framework (AI RMF) | A U.S. government framework for managing AI risk | Apply it to shape governance structure, assign responsibilities, and ensure continuous risk monitoring |
| OWASP Top 10 for LLMs | A list of the most critical security risks for large language models | Use it to ensure your policy explicitly addresses common vulnerabilities like prompt injection and data leakage |
| Cloud Security Alliance (CSA) AI Safety Initiative | A set of guidelines, controls, and training recommendations for GenAI | Adopt CSA-aligned controls and map them to your GenAI tools, especially for cloud and SaaS environments |
| Frontier Model Forum | An industry collaboration focused on safe development of frontier models | Use it to stay informed on evolving best practices, particularly if you're using cutting-edge foundation models |
| NVD and CVE Extensions for AI | U.S. government vulnerability listings adapted for AI contexts | Monitor these sources for AI-specific CVEs and apply relevant patches or compensating controls |
| Google Secure AI Framework (SAIF) | A security framework from Google for securing AI systems | Use it to shape secure development and deployment practices, especially in production environments |

- What Is AI Governance?

- AI Risk Management Frameworks: Everything You Need to Know

- NIST AI Risk Management Framework (AI RMF)

- What Is Google's Secure AI Framework (SAIF)?

Test your response to real-world AI infrastructure attacks. Explore Unit 42 Tabletop Exercises (TTX).

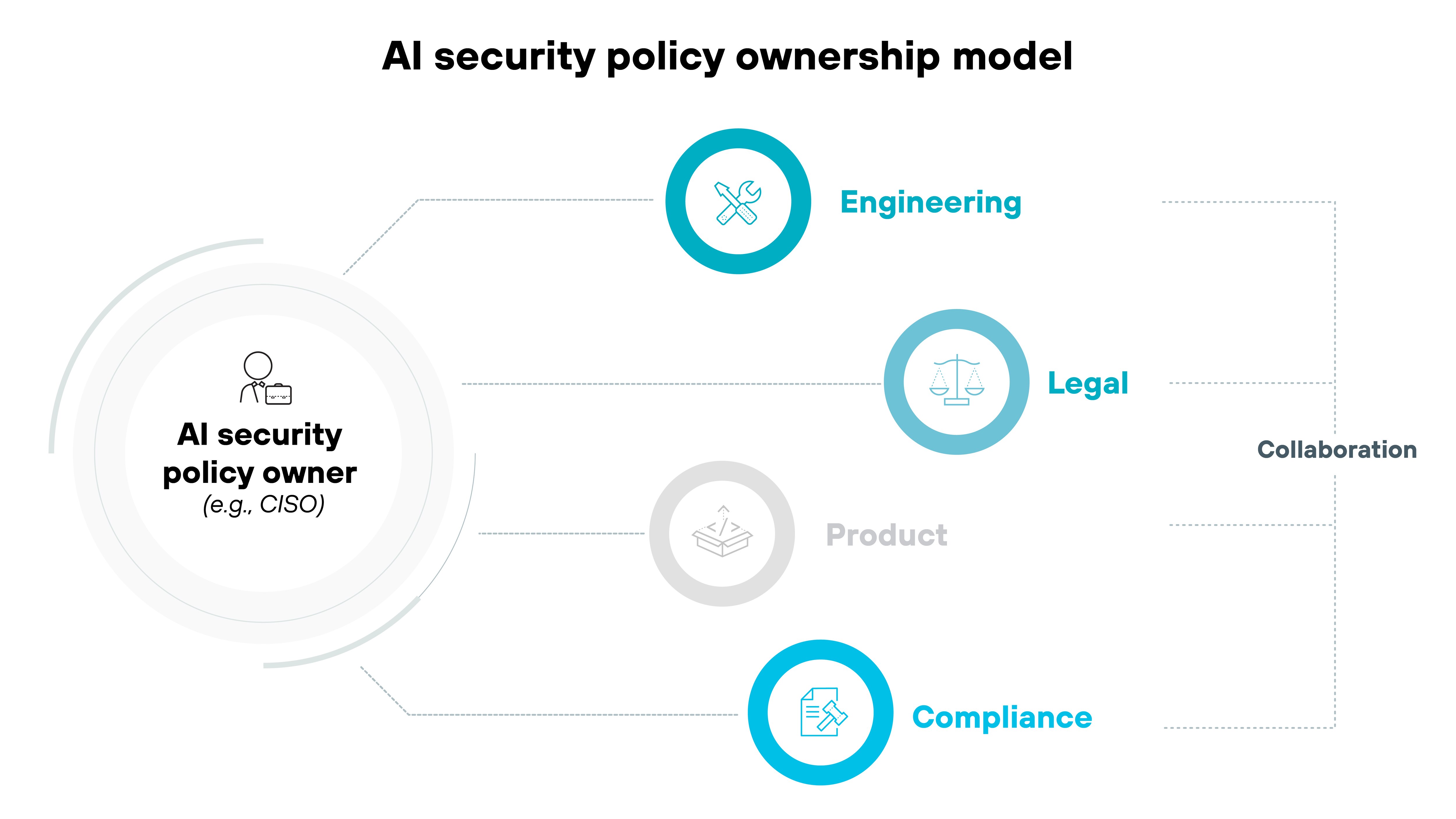

Who should own the AI security policy in the organization?

There's no one-size-fits-all owner for an AI security policy. But every organization should assign clear ownership, ideally to a senior leader or cross-functional team.

Who owns it will depend on how your organization is structured and how deeply GenAI is embedded into your workflows.

What matters most is having someone accountable for aligning the policy to real risks and driving it forward.

In most cases, the CISO or a central security leader should take point. They already oversee broader risk and compliance efforts, so anchoring AI security policy there keeps it integrated and consistent. But they shouldn't act alone.

Here's why:

GenAI risk spans more than cybersecurity. You need legal, compliance, engineering, and product involved too.

Some organizations may benefit from a formal AI governance board. Others might designate domain-specific policy owners or security champions across business units.

Whatever the structure, the essential requirement is cross-functional coordination with clear roles and accountability.