- 1. What is the difference between shadow IT and shadow AI?

- 2. How does shadow AI happen?

- 3. What are some examples of shadow AI?

- 4. What are the primary risks of shadow AI?

- 5. How to determine how and when employees are allowed to use GenAI apps

- 6. How to protect against shadow AI in 5 steps

- 7. Top 5 myths and misconceptions about shadow AI

- 8. Shadow AI FAQs

What Is Shadow AI? How It Happens and What to Do About It

Shadow AI is the use of artificial intelligence tools or systems without the approval, monitoring, or involvement of an organization's IT or security teams.

It often occurs when employees use AI applications for work-related tasks without the knowledge of their employers, leading to potential security risks, data leaks, and compliance issues.

What is the difference between shadow IT and shadow AI?

Shadow IT refers to any technology—apps, tools, or services—used without approval from an organization's IT department. Employees often turn to shadow IT when sanctioned tools feel too slow, limited, or unavailable.

As noted earlier, shadow AI is a newer, more specific trend. It involves using artificial intelligence tools, like ChatGPT or Claude, without formal oversight. While it shares the same unofficial nature as shadow IT, shadow AI introduces unique risks tied to how AI models handle data, generate outputs, and influence decisions.

The distinction matters. Shadow IT is mostly about unsanctioned access or infrastructure. Shadow AI is a GenAI security risk focused on unauthorized use of AI tools, which can directly impact security, compliance, and business outcomes in more unpredictable ways.

How does shadow AI happen?

Shadow AI happens when employees adopt generative AI tools on their own—without IT oversight or approval.

That might include using public tools like ChatGPT to summarize documents or relying on third-party AI plug-ins in workflows like design, development, or marketing.

In most cases, the intent isn't malicious. It's about getting work done faster. But because these tools fall outside sanctioned channels, they aren't covered by enterprise security, governance, or compliance controls.

It often starts with small decisions.

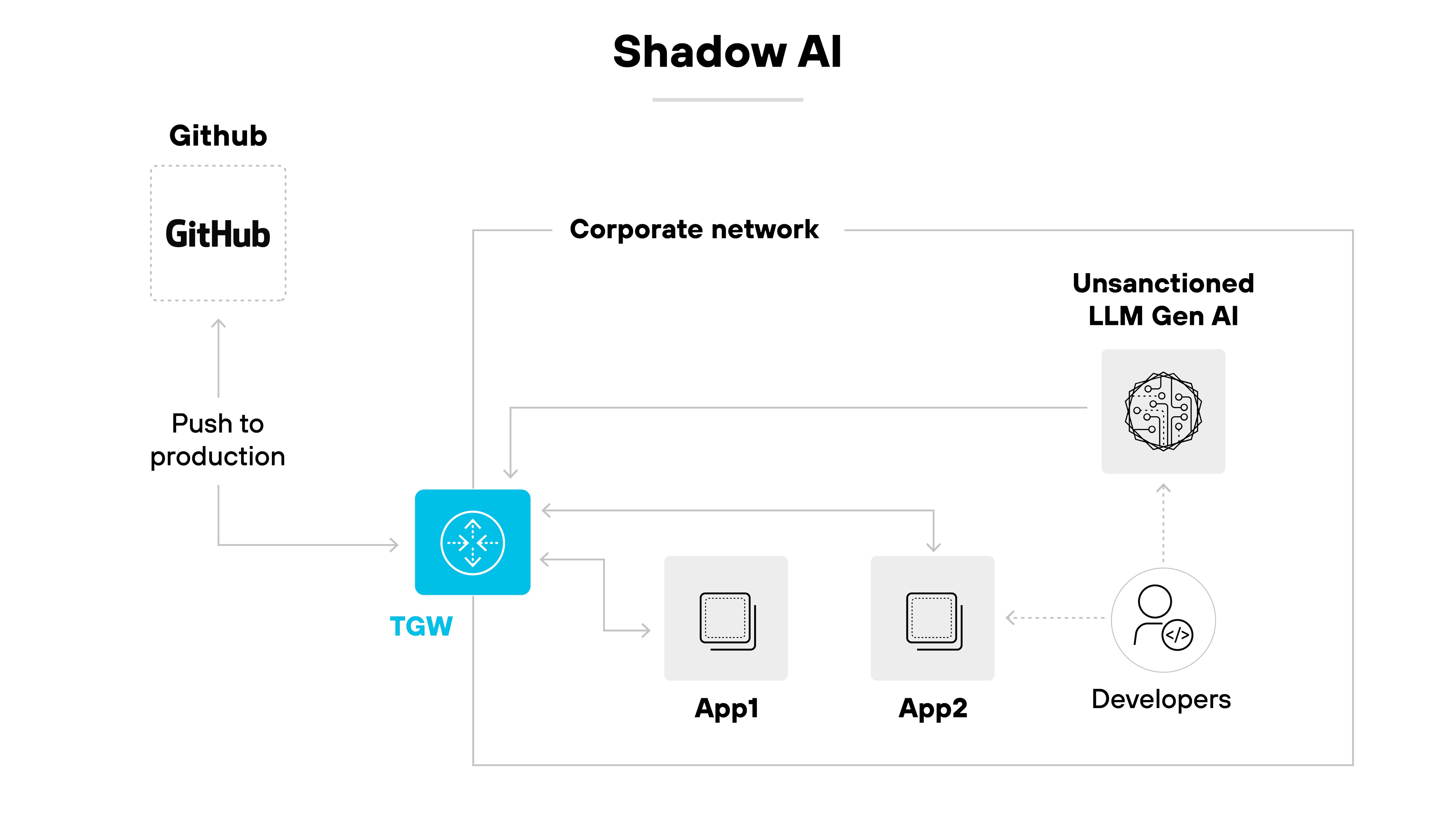

An employee might paste sensitive data into a chatbot while drafting a report. A team might use an open-source LLM API to build internal tools without notifying IT. Developers may embed GenAI features into apps or pipelines using services like Hugging Face or OpenRouter. Others might use personal accounts to log into SaaS apps that include embedded AI features.

These behaviors rarely go through procurement, security, or compliance review.

What makes this so common is how accessible AI tools have become. Many are free, browser-based, or built into existing platforms.

And because most organizations are still forming their governance approach, employees often act before formal guidance exists. Especially when centralized IT teams are stretched thin.

Here's the problem.

These tools often touch sensitive data. When used informally, they can introduce risks like data leakage, regulatory violations, or exposure to malicious models.

And because they're unsanctioned, IT and security teams usually don't even know they're in use. Let alone have a way to monitor or restrict them.

What are some examples of shadow AI?

Shadow AI appears in subtle ways that don't always register as security events. Until they do.

A product manager uses Claude to summarize an internal strategy deck before sharing it with a vendor. The deck includes unreleased timelines and partner names. No one reviews the output, and the prompt history may be retained on Anthropic's servers.

A developer builds a small internal chatbot that interfaces with customer data. They use OpenRouter to access a fast open-source LLM via API. The project never enters the security backlog because it doesn't require infrastructure changes.

A marketing designer uses Canva's AI image tools to generate campaign visuals from brand copy. The prompt contains product names, and the final files are exported and reused in web assets. The team assumes it's covered under the standard SaaS agreement, but never verifies it with procurement or legal.

Each example seems routine, even helpful. But the tools fall outside security's line of sight, and their behavior often goes untracked.

What are the primary risks of shadow AI?

Shadow AI introduces risk not just because of the tools being used, but because they operate outside of formal oversight. That means no monitoring, no enforcement, and no guarantee of compliance.

“Shadow AI creates blind spots where sensitive data might be leaked or even used to train AI models.”

- Organizations saw on average 66 GenAI apps, with 10% classified as high risk.

- We observed an average 6.6 high-risk GenAI apps per company.

- GenAI-related DLP incidents increased more than 2.5X, now comprising 14% of all DLP incidents.

Here's what that lack of oversight leads to:

- Unauthorized processing of sensitive data: Employees may submit proprietary, regulated, or confidential data into external AI systems without realizing how or where that data is stored.

- Regulatory noncompliance: Shadow AI tools can bypass data handling requirements defined by laws like GDPR, HIPAA, or the DPDP Act. That opens the door to fines, investigations, or lawsuits.

- Expansion of the attack surface: These tools often introduce unsecured APIs, personal device access, or unmanaged integrations. Any of those can serve as an entry point for attackers.

- Lack of auditability and accountability: Outputs from shadow AI are usually not traceable. If something fails, there's no way to verify what data was used, how it was processed, or why a decision was made.

- Model poisoning and unvetted outputs: Employees may use external models that have been trained on corrupted data. That means the results can be biased, inaccurate, or even manipulated.

- Data leakage: Some tools store inputs or metadata on third-party servers. If an employee uses them to process customer information or internal code, that data may be exposed without anyone knowing.

- Overprivileged or insecure third-party access: Shadow AI systems may be granted broad permissions to speed up a task. But wide access without control is one of the fastest ways to lose visibility and open gaps.

That's the real issue with shadow AI. It's not just a rogue tool. It's an entire layer of activity that happens outside the systems built to protect the business.

How to determine how and when employees are allowed to use GenAI apps

Most organizations don't start with a formal GenAI policy. Instead, they react to demand. Or to risk.

But waiting until something breaks doesn't work. The better approach is to decide ahead of time how and when GenAI apps are allowed.

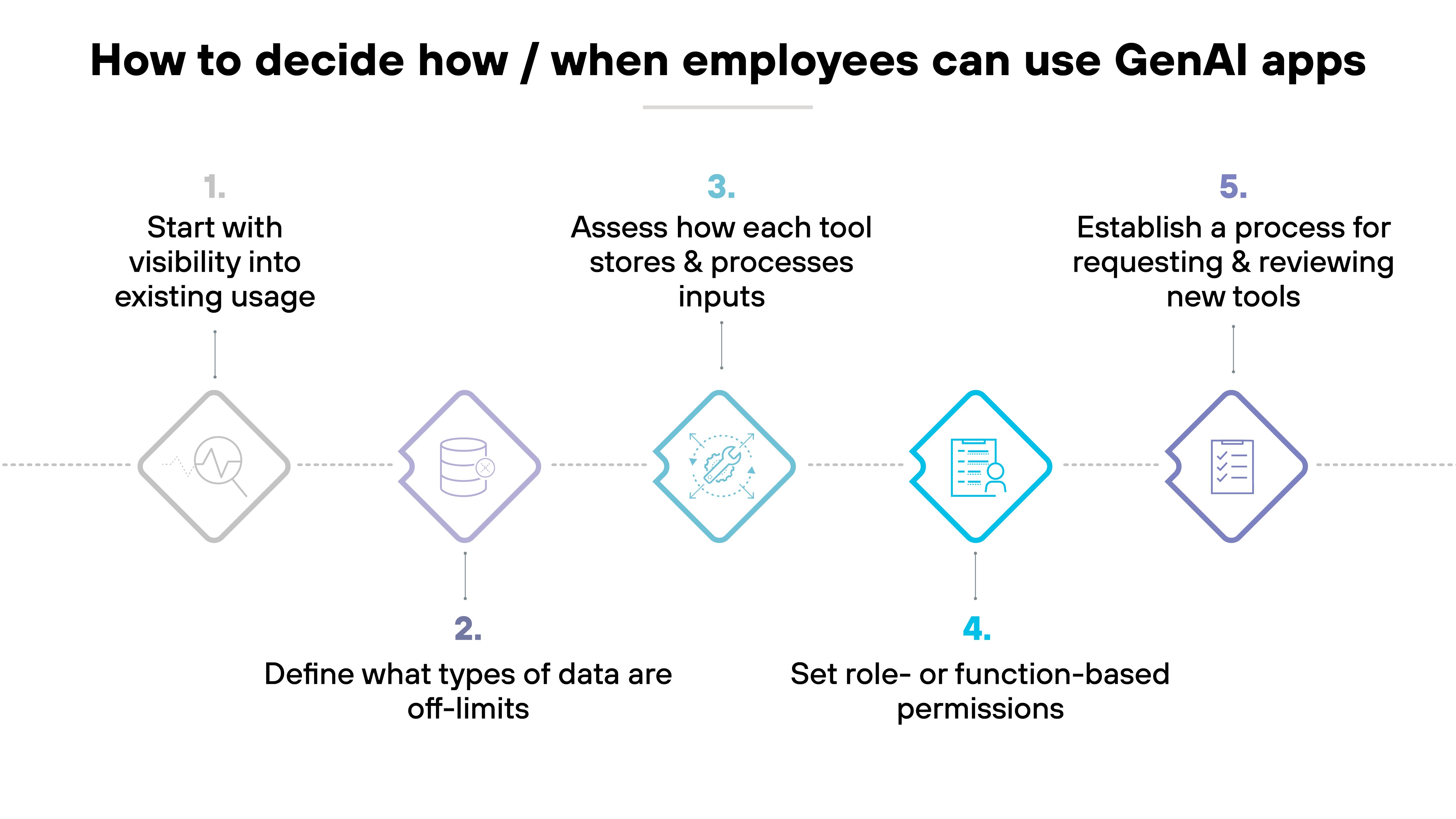

Here's how to break it down:

1. Start with visibility into existing usage

You can't set rules for what you don't know exists.

Shadow AI often starts with personal accounts, browser plug-ins, or app features that don't get flagged by traditional tooling. That's why the first step is discovering what's already in use.

Endpoint logs, SaaS discovery platforms, and browser extension audits can all help.
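As a rough illustration of discovery, the Python sketch below scans proxy log lines for requests to known GenAI domains and records which users reached each app. The log format (`<user> <url>` per line), the `GENAI_DOMAINS` watchlist, and the function names are hypothetical; a real deployment would rely on a maintained app catalog and the fields its own proxy or SaaS discovery platform actually exports.

```python
from collections import defaultdict
from typing import Optional
from urllib.parse import urlparse

# Hypothetical watchlist. A real deployment would pull this from a
# maintained SaaS/GenAI app catalog instead of hard-coding it.
GENAI_DOMAINS = {
    "chat.openai.com": "ChatGPT",
    "chatgpt.com": "ChatGPT",
    "claude.ai": "Claude",
    "openrouter.ai": "OpenRouter",
    "huggingface.co": "Hugging Face",
}


def app_for_host(host: str) -> Optional[str]:
    """Return the GenAI app name if host is a watched domain or a subdomain of one."""
    host = host.lower()
    for domain, app in GENAI_DOMAINS.items():
        if host == domain or host.endswith("." + domain):
            return app
    return None


def discover_usage(log_lines):
    """Map each GenAI app to the set of users seen reaching it.

    Assumes a hypothetical log format of "<user> <url>" per line.
    """
    usage = defaultdict(set)
    for line in log_lines:
        parts = line.split()
        if len(parts) != 2:
            continue  # skip malformed lines rather than failing the whole scan
        user, url = parts
        host = urlparse(url).hostname or ""
        app = app_for_host(host)
        if app:
            usage[app].add(user)
    return dict(usage)


logs = [
    "alice https://claude.ai/chat/123",
    "bob https://openrouter.ai/api/v1/chat/completions",
    "alice https://intranet.example.com/home",
]
print(discover_usage(logs))
```

Matching on subdomains (`host.endswith("." + domain)`) catches API and regional endpoints without also matching look-alike hosts such as `nothuggingface.co`.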

2. Define what types of data are off-limits

Not all data can be used with GenAI tools. Especially when the tools operate outside IT control.

Decide what categories of data should never be input, like customer records, source code, or regulated PII. That baseline helps set clear boundaries for all future decisions.
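One way to make the off-limits list enforceable is to express it as checks that run before a prompt leaves the organization. This minimal sketch assumes two hypothetical categories backed by crude regular expressions. It is not a DLP engine, and categories like customer records usually need data labels or classifiers rather than patterns.

```python
import re

# Hypothetical, deliberately crude patterns. Production DLP uses far richer
# detection; these only illustrate turning a data policy into a check.
OFF_LIMITS_PATTERNS = {
    "regulated_pii": [
        re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),          # US SSN-like format
        re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),  # email address
    ],
    "source_code": [
        # Python-style definitions or imports at the start of a line
        re.compile(r"^\s*(def|class|import|from)\s+\w+", re.MULTILINE),
        # Java/C#-style method signatures
        re.compile(r"\b(public|private)\s+(static\s+)?\w+\s+\w+\s*\("),
    ],
}


def blocked_categories(prompt: str) -> list[str]:
    """Return the off-limits data categories detected in a prompt, sorted."""
    found = []
    for category, patterns in OFF_LIMITS_PATTERNS.items():
        if any(p.search(prompt) for p in patterns):
            found.append(category)
    return sorted(found)


print(blocked_categories("Customer SSN is 123-45-6789"))
```

An empty result means no listed category was detected, not that the prompt is safe; the check only enforces the baseline the policy defines.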

3. Assess how each tool stores and processes inputs

Each GenAI app handles data differently. Some store prompts. Others train on user input. And some keep nothing at all.

Review the vendor's documentation. Ask how long data is retained, whether it's shared with third parties, and if it's used for model improvement.
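The answers to those vendor questions can be recorded in a consistent shape and mapped to a risk tier. The fields, the 30-day retention threshold, and the tier names below are assumptions for illustration, not an industry standard.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VendorDataHandling:
    """Answers gathered from a vendor's documentation or questionnaire."""
    tool: str
    retention_days: Optional[int]  # None means unknown or not documented
    shared_with_third_parties: bool
    trains_on_inputs: bool


def risk_tier(v: VendorDataHandling) -> str:
    """Hypothetical scoring; thresholds are illustrative only."""
    # Inputs leaving the vendor's control, or feeding model training, rank highest.
    if v.trains_on_inputs or v.shared_with_third_parties:
        return "high"
    # Unknown or long retention still warrants a closer look.
    if v.retention_days is None or v.retention_days > 30:
        return "medium"
    return "low"


print(risk_tier(VendorDataHandling("example-tool", 0, False, False)))
```

Treating undocumented retention as at least medium risk reflects the point above: if the vendor can't say how long data is kept, that gap is itself a finding.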

4. Set role- or function-based permissions

Not every team needs the same level of access.

Developers might need API-based tools for prototyping. Marketing teams might only need basic writing support.

Designate who can use what. And for what purpose. That makes enforcement easier and reduces policy friction.
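Role- or function-based permissions can start as a simple lookup table. In this hypothetical sketch, each role maps tool categories to the purposes they're approved for, and anything not listed is denied by default. The role names, tool categories, and purposes are placeholders modeled on the developer and marketing examples above.

```python
# Hypothetical policy table: role -> {tool category: approved purposes}.
ROLE_POLICY = {
    "developer": {
        "api_llm": {"prototyping"},
        "writing_assistant": {"documentation"},
    },
    "marketing": {
        "writing_assistant": {"copy_drafting"},
    },
}


def is_allowed(role: str, tool_category: str, purpose: str) -> bool:
    """Deny by default: only explicitly listed combinations are allowed."""
    return purpose in ROLE_POLICY.get(role, {}).get(tool_category, set())


print(is_allowed("developer", "api_llm", "prototyping"))
print(is_allowed("marketing", "api_llm", "prototyping"))
```

Deny-by-default keeps the table short: teams request additions instead of security enumerating every prohibited combination.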

5. Establish a process for requesting and reviewing new tools

Shadow AI isn't just about what's already in use. It's also about what comes next.

Employees will continue finding new apps.

Instead of blocking everything, create a lightweight review process. That gives teams a path to get tools approved. And helps security teams stay ahead.

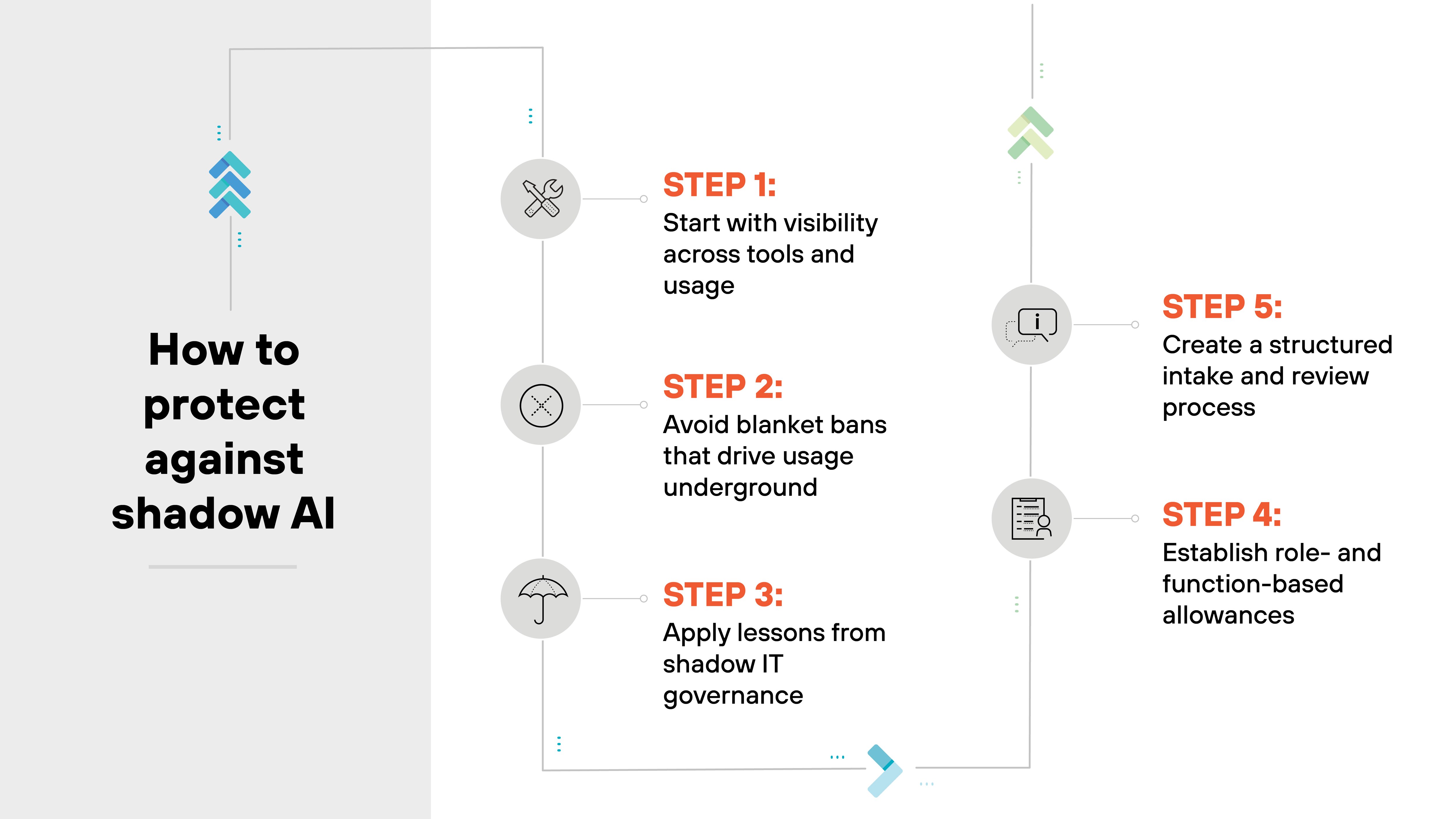

How to protect against shadow AI in 5 steps

Protecting against shadow AI means more than blocking tools. It means learning from what worked—and didn't—during the era of shadow IT.

Tools will always outpace policy. So the real focus needs to be on visibility, structure, and accountability.

Here's how to approach it:

Step 1: Start with visibility across tools and usage

Most organizations discover shadow AI use only after something goes wrong. But it's possible to get ahead of it.

Start with SaaS discovery tools, browser extension logs, and endpoint data.

Look for prompts sent to public LLMs, API connections to external models, and use of AI features in sanctioned apps. This helps establish a baseline. And gives security teams a place to start.
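Once discovery is running, the findings can be summarized into a baseline so that later scans highlight what's new. This sketch assumes each observation has already been reduced to a hypothetical `(department, app, signal)` tuple, where `signal` is one of the three categories named above; the labels themselves are placeholders.

```python
from collections import Counter

# Hypothetical event shape: (department, app, signal), where signal is
# "public_llm_prompt", "external_model_api", or "embedded_ai_feature".
Event = tuple[str, str, str]


def build_baseline(events: list[Event]) -> Counter:
    """Count events per (department, app, signal) to describe current usage."""
    return Counter(events)


def new_since_baseline(baseline: Counter, events: list[Event]) -> set[Event]:
    """Return (department, app, signal) combinations absent from the baseline."""
    return {e for e in events if e not in baseline}


baseline = build_baseline([
    ("eng", "OpenRouter", "external_model_api"),
    ("mktg", "Canva", "embedded_ai_feature"),
])
print(new_since_baseline(baseline, [("hr", "Claude", "public_llm_prompt")]))
```

The counts describe how much each combination is used today; the set difference is what gives security teams a short list of new activity to review.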

Step 2: Avoid blanket bans that drive usage underground

Some companies try to eliminate risk by banning all GenAI use. But that often backfires.

Employees will still use these tools—just in less visible ways. That means less oversight, not more.

Instead, focus on enabling safe usage. That includes clear rules about what kinds of data can be used, where tools are allowed, and what needs approval first.

Step 3: Apply lessons from shadow IT governance

Shadow IT showed that enforcement-only strategies don't work. What did work was creating lightweight approval processes, offering secure internal alternatives, and giving teams space to innovate without bypassing oversight.

Shadow AI benefits from the same model. Think: enablement plus guardrails. Not lockdowns.

Step 4: Establish role- and function-based allowances

One-size-fits-all policies tend to break. Instead, define GenAI permissions based on role, team function, or use case.

For example: Design teams might be cleared to use image generation tools under specific conditions. Developers might be allowed to use local LLMs for prototyping, but not for customer data processing.

This keeps policy realistic and enforceable.
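The design and developer examples can be written as rules that combine team, tool type, use case, and a data-classification condition. The team names, tool types, use cases, and data classes in this sketch are hypothetical. The point is that the data condition is checked explicitly, so the same tool can be allowed for prototyping on internal data and denied once customer data is involved.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    team: str
    tool_type: str   # e.g. "image_generation", "local_llm"
    use_case: str    # e.g. "campaign_visuals", "prototyping"
    data_class: str  # e.g. "public", "internal", "customer"


# Hypothetical rules: (team, tool_type, use_case, permitted data classes).
# Any request that matches no rule is denied.
RULES = [
    ("design", "image_generation", "campaign_visuals", {"public", "internal"}),
    ("engineering", "local_llm", "prototyping", {"public", "internal"}),
]


def evaluate(req: Request) -> bool:
    """Allow only if a rule matches and the request's data class is permitted."""
    for team, tool_type, use_case, allowed_data in RULES:
        if (req.team, req.tool_type, req.use_case) == (team, tool_type, use_case):
            return req.data_class in allowed_data
    return False


print(evaluate(Request("engineering", "local_llm", "prototyping", "customer")))
```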

Step 5: Create a structured intake and review process

Employees will keep finding new tools. That's not the problem.

The problem is when there's no way to flag or evaluate them. Offer a simple, well-defined process for requesting GenAI tool reviews.

This doesn't have to be a full risk assessment. Just enough structure to capture usage, evaluate risk, and decide whether to approve, restrict, or sandbox.
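A lightweight intake can be little more than a structured record plus a triage rule that returns one of the three outcomes named above. The fields and rules in this sketch are hypothetical and intentionally coarse: undocumented retention routes a tool to a sandbox for evaluation, sensitive data combined with a vendor that trains on inputs leads to a restriction, and everything else is approved.

```python
from dataclasses import dataclass


@dataclass
class ToolRequest:
    """Minimal intake record: just enough structure to capture usage."""
    tool: str
    requester_team: str
    purpose: str
    handles_sensitive_data: bool
    vendor_trains_on_inputs: bool
    vendor_retention_documented: bool


def triage(req: ToolRequest) -> str:
    """Hypothetical triage returning "approve", "restrict", or "sandbox"."""
    # Unknowns come first: without documented retention, evaluate in isolation.
    if not req.vendor_retention_documented:
        return "sandbox"
    # Sensitive data plus training on inputs is the combination to restrict.
    if req.handles_sensitive_data and req.vendor_trains_on_inputs:
        return "restrict"
    return "approve"


print(triage(ToolRequest("ExampleTool", "marketing", "copy drafting", False, False, True)))
```

Keeping the rules this small is deliberate: the goal is a fast, predictable answer that gives teams a path to approval, with deeper review reserved for the restricted and sandboxed cases.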

Top 5 myths and misconceptions about shadow AI

Shadow AI is a fast-moving topic, and it's easy to make assumptions. Especially when the tools feel familiar or harmless.

But not all common beliefs hold up. And misunderstanding the problem often leads to poor decisions around policy, risk, or enforcement.

Here are five myths worth clearing up:

Myth #1: Shadow AI only means unauthorized tools

Reality: Not quite. Shadow AI includes any AI use that lacks IT oversight, even if the tool itself is technically allowed.

For example: Using new GenAI features in an approved SaaS platform without going through an updated security review.

Myth #2: Banning AI tools stops shadow AI

Reality: It rarely works that way. Blocking public GenAI apps can actually push users toward more obscure or unmanaged alternatives.

That makes usage harder to track. And the risks are even harder to contain.

Myth #3: Shadow AI is always risky or malicious

Reality: Most shadow AI use starts from good intentions. Employees are trying to save time or be more productive.

The issue isn't motivation. It's that these actions bypass the normal review and approval process.

Myth #4: Shadow AI is easy to detect

Reality: Not necessarily. Employees might use AI plug-ins inside approved tools, or access GenAI features from personal accounts.

Without specific monitoring tools in place, a lot of shadow AI activity flies under the radar.

Myth #5: Shadow AI only matters in technical roles

Reality: Wrong. Shadow AI shows up in marketing, HR, design, operations. Any team trying to move fast or experiment.

And because these roles may not be security-focused, they're more likely to miss the risks.